mirror of

https://github.com/mendableai/firecrawl.git

synced 2024-11-16 03:32:22 +08:00

Merge branch 'main' into pr/623

This commit is contained in:

commit

48eb6fc494

2

.github/ISSUE_TEMPLATE/bug_report.md

vendored

2

.github/ISSUE_TEMPLATE/bug_report.md

vendored

|

|

@ -1,7 +1,7 @@

|

||||||

---

|

---

|

||||||

name: Bug report

|

name: Bug report

|

||||||

about: Create a report to help us improve

|

about: Create a report to help us improve

|

||||||

title: "[BUG]"

|

title: "[Bug] "

|

||||||

labels: bug

|

labels: bug

|

||||||

assignees: ''

|

assignees: ''

|

||||||

|

|

||||||

|

|

|

||||||

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored

|

|

@ -1,7 +1,7 @@

|

||||||

---

|

---

|

||||||

name: Feature request

|

name: Feature request

|

||||||

about: Suggest an idea for this project

|

about: Suggest an idea for this project

|

||||||

title: "[Feat]"

|

title: "[Feat] "

|

||||||

labels: ''

|

labels: ''

|

||||||

assignees: ''

|

assignees: ''

|

||||||

|

|

||||||

|

|

|

||||||

40

.github/ISSUE_TEMPLATE/self_host_issue.md

vendored

Normal file

40

.github/ISSUE_TEMPLATE/self_host_issue.md

vendored

Normal file

|

|

@ -0,0 +1,40 @@

|

||||||

|

---

|

||||||

|

name: Self-host issue

|

||||||

|

about: Report an issue with self-hosting Firecrawl

|

||||||

|

title: "[Self-Host] "

|

||||||

|

labels: self-host

|

||||||

|

assignees: ''

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

**Describe the Issue**

|

||||||

|

Provide a clear and concise description of the self-hosting issue you're experiencing.

|

||||||

|

|

||||||

|

**To Reproduce**

|

||||||

|

Steps to reproduce the issue:

|

||||||

|

1. Configure the environment or settings with '...'

|

||||||

|

2. Run the command '...'

|

||||||

|

3. Observe the error or unexpected output at '...'

|

||||||

|

4. Log output/error message

|

||||||

|

|

||||||

|

**Expected Behavior**

|

||||||

|

A clear and concise description of what you expected to happen when self-hosting.

|

||||||

|

|

||||||

|

**Screenshots**

|

||||||

|

If applicable, add screenshots or copies of the command line output to help explain the self-hosting issue.

|

||||||

|

|

||||||

|

**Environment (please complete the following information):**

|

||||||

|

- OS: [e.g. macOS, Linux, Windows]

|

||||||

|

- Firecrawl Version: [e.g. 1.2.3]

|

||||||

|

- Node.js Version: [e.g. 14.x]

|

||||||

|

- Docker Version (if applicable): [e.g. 20.10.14]

|

||||||

|

- Database Type and Version: [e.g. PostgreSQL 13.4]

|

||||||

|

|

||||||

|

**Logs**

|

||||||

|

If applicable, include detailed logs to help understand the self-hosting problem.

|

||||||

|

|

||||||

|

**Configuration**

|

||||||

|

Provide relevant parts of your configuration files (with sensitive information redacted).

|

||||||

|

|

||||||

|

**Additional Context**

|

||||||

|

Add any other context about the self-hosting issue here, such as specific infrastructure details, network setup, or any modifications made to the original Firecrawl setup.

|

||||||

3

.github/workflows/ci.yml

vendored

3

.github/workflows/ci.yml

vendored

|

|

@ -28,7 +28,8 @@ env:

|

||||||

HYPERDX_API_KEY: ${{ secrets.HYPERDX_API_KEY }}

|

HYPERDX_API_KEY: ${{ secrets.HYPERDX_API_KEY }}

|

||||||

HDX_NODE_BETA_MODE: 1

|

HDX_NODE_BETA_MODE: 1

|

||||||

FIRE_ENGINE_BETA_URL: ${{ secrets.FIRE_ENGINE_BETA_URL }}

|

FIRE_ENGINE_BETA_URL: ${{ secrets.FIRE_ENGINE_BETA_URL }}

|

||||||

|

USE_DB_AUTHENTICATION: ${{ secrets.USE_DB_AUTHENTICATION }}

|

||||||

|

ENV: ${{ secrets.ENV }}

|

||||||

|

|

||||||

jobs:

|

jobs:

|

||||||

pre-deploy:

|

pre-deploy:

|

||||||

|

|

|

||||||

8

.github/workflows/fly-direct.yml

vendored

8

.github/workflows/fly-direct.yml

vendored

|

|

@ -1,7 +1,7 @@

|

||||||

name: Fly Deploy Direct

|

name: Fly Deploy Direct

|

||||||

on:

|

on:

|

||||||

schedule:

|

schedule:

|

||||||

- cron: '0 */2 * * *'

|

- cron: '0 * * * *'

|

||||||

|

|

||||||

env:

|

env:

|

||||||

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY }}

|

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY }}

|

||||||

|

|

@ -22,7 +22,13 @@ env:

|

||||||

SUPABASE_SERVICE_TOKEN: ${{ secrets.SUPABASE_SERVICE_TOKEN }}

|

SUPABASE_SERVICE_TOKEN: ${{ secrets.SUPABASE_SERVICE_TOKEN }}

|

||||||

SUPABASE_URL: ${{ secrets.SUPABASE_URL }}

|

SUPABASE_URL: ${{ secrets.SUPABASE_URL }}

|

||||||

TEST_API_KEY: ${{ secrets.TEST_API_KEY }}

|

TEST_API_KEY: ${{ secrets.TEST_API_KEY }}

|

||||||

|

PYPI_USERNAME: ${{ secrets.PYPI_USERNAME }}

|

||||||

|

PYPI_PASSWORD: ${{ secrets.PYPI_PASSWORD }}

|

||||||

|

NPM_TOKEN: ${{ secrets.NPM_TOKEN }}

|

||||||

|

CRATES_IO_TOKEN: ${{ secrets.CRATES_IO_TOKEN }}

|

||||||

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

||||||

|

USE_DB_AUTHENTICATION: ${{ secrets.USE_DB_AUTHENTICATION }}

|

||||||

|

ENV: ${{ secrets.ENV }}

|

||||||

|

|

||||||

jobs:

|

jobs:

|

||||||

deploy:

|

deploy:

|

||||||

|

|

|

||||||

304

.github/workflows/fly.yml

vendored

304

.github/workflows/fly.yml

vendored

|

|

@ -29,9 +29,10 @@ env:

|

||||||

CRATES_IO_TOKEN: ${{ secrets.CRATES_IO_TOKEN }}

|

CRATES_IO_TOKEN: ${{ secrets.CRATES_IO_TOKEN }}

|

||||||

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

||||||

USE_DB_AUTHENTICATION: ${{ secrets.USE_DB_AUTHENTICATION }}

|

USE_DB_AUTHENTICATION: ${{ secrets.USE_DB_AUTHENTICATION }}

|

||||||

|

ENV: ${{ secrets.ENV }}

|

||||||

|

|

||||||

jobs:

|

jobs:

|

||||||

pre-deploy-e2e-tests:

|

pre-deploy:

|

||||||

name: Pre-deploy checks

|

name: Pre-deploy checks

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

services:

|

services:

|

||||||

|

|

@ -58,197 +59,15 @@ jobs:

|

||||||

run: npm run workers &

|

run: npm run workers &

|

||||||

working-directory: ./apps/api

|

working-directory: ./apps/api

|

||||||

id: start_workers

|

id: start_workers

|

||||||

- name: Wait for the application to be ready

|

|

||||||

run: |

|

|

||||||

sleep 10

|

|

||||||

- name: Run E2E tests

|

- name: Run E2E tests

|

||||||

run: |

|

run: |

|

||||||

npm run test:prod

|

npm run test:prod

|

||||||

working-directory: ./apps/api

|

working-directory: ./apps/api

|

||||||

|

|

||||||

pre-deploy-test-suite:

|

|

||||||

name: Test Suite

|

|

||||||

needs: pre-deploy-e2e-tests

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

services:

|

|

||||||

redis:

|

|

||||||

image: redis

|

|

||||||

ports:

|

|

||||||

- 6379:6379

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v3

|

|

||||||

- name: Set up Node.js

|

|

||||||

uses: actions/setup-node@v3

|

|

||||||

with:

|

|

||||||

node-version: "20"

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

- name: Install dependencies

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/api

|

|

||||||

- name: Start the application

|

|

||||||

run: npm start &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_app

|

|

||||||

- name: Start workers

|

|

||||||

run: npm run workers &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_workers

|

|

||||||

- name: Install dependencies

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/test-suite

|

|

||||||

- name: Run E2E tests

|

|

||||||

run: |

|

|

||||||

npm run test:suite

|

|

||||||

working-directory: ./apps/test-suite

|

|

||||||

|

|

||||||

python-sdk-tests:

|

|

||||||

name: Python SDK Tests

|

|

||||||

needs: pre-deploy-e2e-tests

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

services:

|

|

||||||

redis:

|

|

||||||

image: redis

|

|

||||||

ports:

|

|

||||||

- 6379:6379

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v3

|

|

||||||

- name: Set up Python

|

|

||||||

uses: actions/setup-python@v4

|

|

||||||

with:

|

|

||||||

python-version: '3.x'

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

- name: Install dependencies

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/api

|

|

||||||

- name: Start the application

|

|

||||||

run: npm start &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_app

|

|

||||||

- name: Start workers

|

|

||||||

run: npm run workers &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_workers

|

|

||||||

- name: Install Python dependencies

|

|

||||||

run: |

|

|

||||||

python -m pip install --upgrade pip

|

|

||||||

pip install -r requirements.txt

|

|

||||||

working-directory: ./apps/python-sdk

|

|

||||||

- name: Run E2E tests for Python SDK

|

|

||||||

run: |

|

|

||||||

pytest firecrawl/__tests__/v1/e2e_withAuth/test.py

|

|

||||||

working-directory: ./apps/python-sdk

|

|

||||||

|

|

||||||

js-sdk-tests:

|

|

||||||

name: JavaScript SDK Tests

|

|

||||||

needs: pre-deploy-e2e-tests

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

services:

|

|

||||||

redis:

|

|

||||||

image: redis

|

|

||||||

ports:

|

|

||||||

- 6379:6379

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v3

|

|

||||||

- name: Set up Node.js

|

|

||||||

uses: actions/setup-node@v3

|

|

||||||

with:

|

|

||||||

node-version: "20"

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

- name: Install dependencies

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/api

|

|

||||||

- name: Start the application

|

|

||||||

run: npm start &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_app

|

|

||||||

- name: Start workers

|

|

||||||

run: npm run workers &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_workers

|

|

||||||

- name: Install dependencies for JavaScript SDK

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/js-sdk/firecrawl

|

|

||||||

- name: Run E2E tests for JavaScript SDK

|

|

||||||

run: npm run test

|

|

||||||

working-directory: ./apps/js-sdk/firecrawl

|

|

||||||

|

|

||||||

go-sdk-tests:

|

|

||||||

name: Go SDK Tests

|

|

||||||

needs: pre-deploy-e2e-tests

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

services:

|

|

||||||

redis:

|

|

||||||

image: redis

|

|

||||||

ports:

|

|

||||||

- 6379:6379

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v3

|

|

||||||

- name: Set up Go

|

|

||||||

uses: actions/setup-go@v5

|

|

||||||

with:

|

|

||||||

go-version-file: "go.mod"

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

- name: Install dependencies

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/api

|

|

||||||

- name: Start the application

|

|

||||||

run: npm start &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_app

|

|

||||||

- name: Start workers

|

|

||||||

run: npm run workers &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_workers

|

|

||||||

- name: Install dependencies for Go SDK

|

|

||||||

run: go mod tidy

|

|

||||||

working-directory: ./apps/go-sdk

|

|

||||||

- name: Run tests for Go SDK

|

|

||||||

run: go test -v ./... -timeout 180s

|

|

||||||

working-directory: ./apps/go-sdk/firecrawl

|

|

||||||

|

|

||||||

rust-sdk-tests:

|

|

||||||

name: Rust SDK Tests

|

|

||||||

needs: pre-deploy-e2e-tests

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

services:

|

|

||||||

redis:

|

|

||||||

image: redis

|

|

||||||

ports:

|

|

||||||

- 6379:6379

|

|

||||||

steps:

|

|

||||||

- name: Checkout repository

|

|

||||||

uses: actions/checkout@v3

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

- name: Install dependencies for API

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/api

|

|

||||||

- name: Start the application

|

|

||||||

run: npm start &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_app

|

|

||||||

- name: Start workers

|

|

||||||

run: npm run workers &

|

|

||||||

working-directory: ./apps/api

|

|

||||||

id: start_workers

|

|

||||||

- name: Set up Rust

|

|

||||||

uses: actions/setup-rust@v1

|

|

||||||

with:

|

|

||||||

rust-version: stable

|

|

||||||

- name: Try the lib build

|

|

||||||

working-directory: ./apps/rust-sdk

|

|

||||||

run: cargo build

|

|

||||||

- name: Run E2E tests for Rust SDK

|

|

||||||

run: cargo test --test e2e_with_auth

|

|

||||||

|

|

||||||

deploy:

|

deploy:

|

||||||

name: Deploy app

|

name: Deploy app

|

||||||

|

needs: pre-deploy

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

needs: [pre-deploy-test-suite, python-sdk-tests, js-sdk-tests, rust-sdk-tests]

|

|

||||||

steps:

|

steps:

|

||||||

- uses: actions/checkout@v3

|

- uses: actions/checkout@v3

|

||||||

- uses: superfly/flyctl-actions/setup-flyctl@master

|

- uses: superfly/flyctl-actions/setup-flyctl@master

|

||||||

|

|

@ -259,119 +78,4 @@ jobs:

|

||||||

BULL_AUTH_KEY: ${{ secrets.BULL_AUTH_KEY }}

|

BULL_AUTH_KEY: ${{ secrets.BULL_AUTH_KEY }}

|

||||||

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

SENTRY_AUTH_TOKEN: ${{ secrets.SENTRY_AUTH_TOKEN }}

|

||||||

|

|

||||||

build-and-publish-python-sdk:

|

|

||||||

name: Build and publish Python SDK

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

needs: deploy

|

|

||||||

|

|

||||||

steps:

|

|

||||||

- name: Checkout repository

|

|

||||||

uses: actions/checkout@v3

|

|

||||||

|

|

||||||

- name: Set up Python

|

|

||||||

uses: actions/setup-python@v4

|

|

||||||

with:

|

|

||||||

python-version: '3.x'

|

|

||||||

|

|

||||||

- name: Install dependencies

|

|

||||||

run: |

|

|

||||||

python -m pip install --upgrade pip

|

|

||||||

pip install setuptools wheel twine build requests packaging

|

|

||||||

|

|

||||||

- name: Run version check script

|

|

||||||

id: version_check_script

|

|

||||||

run: |

|

|

||||||

PYTHON_SDK_VERSION_INCREMENTED=$(python .github/scripts/check_version_has_incremented.py python ./apps/python-sdk/firecrawl firecrawl-py)

|

|

||||||

echo "PYTHON_SDK_VERSION_INCREMENTED=$PYTHON_SDK_VERSION_INCREMENTED" >> $GITHUB_ENV

|

|

||||||

|

|

||||||

- name: Build the package

|

|

||||||

if: ${{ env.PYTHON_SDK_VERSION_INCREMENTED == 'true' }}

|

|

||||||

run: |

|

|

||||||

python -m build

|

|

||||||

working-directory: ./apps/python-sdk

|

|

||||||

|

|

||||||

- name: Publish to PyPI

|

|

||||||

if: ${{ env.PYTHON_SDK_VERSION_INCREMENTED == 'true' }}

|

|

||||||

env:

|

|

||||||

TWINE_USERNAME: ${{ secrets.PYPI_USERNAME }}

|

|

||||||

TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}

|

|

||||||

run: |

|

|

||||||

twine upload dist/*

|

|

||||||

working-directory: ./apps/python-sdk

|

|

||||||

|

|

||||||

build-and-publish-js-sdk:

|

|

||||||

name: Build and publish JavaScript SDK

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

needs: deploy

|

|

||||||

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v3

|

|

||||||

- name: Set up Node.js

|

|

||||||

uses: actions/setup-node@v3

|

|

||||||

with:

|

|

||||||

node-version: '20'

|

|

||||||

registry-url: 'https://registry.npmjs.org/'

|

|

||||||

scope: '@mendable'

|

|

||||||

always-auth: true

|

|

||||||

|

|

||||||

- name: Install pnpm

|

|

||||||

run: npm install -g pnpm

|

|

||||||

|

|

||||||

- name: Install python for running version check script

|

|

||||||

run: |

|

|

||||||

python -m pip install --upgrade pip

|

|

||||||

pip install setuptools wheel requests packaging

|

|

||||||

|

|

||||||

- name: Install dependencies for JavaScript SDK

|

|

||||||

run: pnpm install

|

|

||||||

working-directory: ./apps/js-sdk/firecrawl

|

|

||||||

|

|

||||||

- name: Run version check script

|

|

||||||

id: version_check_script

|

|

||||||

run: |

|

|

||||||

VERSION_INCREMENTED=$(python .github/scripts/check_version_has_incremented.py js ./apps/js-sdk/firecrawl @mendable/firecrawl-js)

|

|

||||||

echo "VERSION_INCREMENTED=$VERSION_INCREMENTED" >> $GITHUB_ENV

|

|

||||||

|

|

||||||

- name: Build and publish to npm

|

|

||||||

if: ${{ env.VERSION_INCREMENTED == 'true' }}

|

|

||||||

env:

|

|

||||||

NODE_AUTH_TOKEN: ${{ secrets.NPM_TOKEN }}

|

|

||||||

run: |

|

|

||||||

npm run build-and-publish

|

|

||||||

working-directory: ./apps/js-sdk/firecrawl

|

|

||||||

build-and-publish-rust-sdk:

|

|

||||||

name: Build and publish Rust SDK

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

needs: deploy

|

|

||||||

|

|

||||||

steps:

|

|

||||||

- name: Checkout repository

|

|

||||||

uses: actions/checkout@v3

|

|

||||||

|

|

||||||

- name: Set up Rust

|

|

||||||

uses: actions-rs/toolchain@v1

|

|

||||||

with:

|

|

||||||

toolchain: stable

|

|

||||||

default: true

|

|

||||||

profile: minimal

|

|

||||||

|

|

||||||

- name: Install dependencies

|

|

||||||

run: cargo build --release

|

|

||||||

|

|

||||||

- name: Run version check script

|

|

||||||

id: version_check_script

|

|

||||||

run: |

|

|

||||||

VERSION_INCREMENTED=$(cargo search --limit 1 my_crate_name | grep my_crate_name)

|

|

||||||

echo "VERSION_INCREMENTED=$VERSION_INCREMENTED" >> $GITHUB_ENV

|

|

||||||

|

|

||||||

- name: Build the package

|

|

||||||

if: ${{ env.VERSION_INCREMENTED == 'true' }}

|

|

||||||

run: cargo package

|

|

||||||

working-directory: ./apps/rust-sdk

|

|

||||||

|

|

||||||

- name: Publish to crates.io

|

|

||||||

if: ${{ env.VERSION_INCREMENTED == 'true' }}

|

|

||||||

env:

|

|

||||||

CARGO_REG_TOKEN: ${{ secrets.CRATES_IO_TOKEN }}

|

|

||||||

run: cargo publish

|

|

||||||

working-directory: ./apps/rust-sdk

|

|

||||||

5

.gitignore

vendored

5

.gitignore

vendored

|

|

@ -19,5 +19,10 @@ apps/test-suite/load-test-results/test-run-report.json

|

||||||

apps/playwright-service-ts/node_modules/

|

apps/playwright-service-ts/node_modules/

|

||||||

apps/playwright-service-ts/package-lock.json

|

apps/playwright-service-ts/package-lock.json

|

||||||

|

|

||||||

|

|

||||||

|

/examples/o1_web_crawler/venv

|

||||||

*.pyc

|

*.pyc

|

||||||

.rdb

|

.rdb

|

||||||

|

|

||||||

|

apps/js-sdk/firecrawl/dist

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -103,7 +103,7 @@ This should return the response Hello, world!

|

||||||

If you’d like to test the crawl endpoint, you can run this

|

If you’d like to test the crawl endpoint, you can run this

|

||||||

|

|

||||||

```curl

|

```curl

|

||||||

curl -X POST http://localhost:3002/v0/crawl \

|

curl -X POST http://localhost:3002/v1/crawl \

|

||||||

-H 'Content-Type: application/json' \

|

-H 'Content-Type: application/json' \

|

||||||

-d '{

|

-d '{

|

||||||

"url": "https://mendable.ai"

|

"url": "https://mendable.ai"

|

||||||

|

|

|

||||||

39

README.md

39

README.md

|

|

@ -34,9 +34,9 @@

|

||||||

|

|

||||||

# 🔥 Firecrawl

|

# 🔥 Firecrawl

|

||||||

|

|

||||||

Crawl and convert any website into LLM-ready markdown or structured data. Built by [Mendable.ai](https://mendable.ai?ref=gfirecrawl) and the Firecrawl community. Includes powerful scraping, crawling and data extraction capabilities.

|

Empower your AI apps with clean data from any website. Featuring advanced scraping, crawling, and data extraction capabilities.

|

||||||

|

|

||||||

_This repository is in its early development stages. We are still merging custom modules in the mono repo. It's not completely yet ready for full self-host deployment, but you can already run it locally._

|

_This repository is in development, and we’re still integrating custom modules into the mono repo. It's not fully ready for self-hosted deployment yet, but you can run it locally._

|

||||||

|

|

||||||

## What is Firecrawl?

|

## What is Firecrawl?

|

||||||

|

|

||||||

|

|

@ -52,9 +52,12 @@ _Pst. hey, you, join our stargazers :)_

|

||||||

|

|

||||||

We provide an easy to use API with our hosted version. You can find the playground and documentation [here](https://firecrawl.dev/playground). You can also self host the backend if you'd like.

|

We provide an easy to use API with our hosted version. You can find the playground and documentation [here](https://firecrawl.dev/playground). You can also self host the backend if you'd like.

|

||||||

|

|

||||||

- [x] [API](https://firecrawl.dev/playground)

|

Check out the following resources to get started:

|

||||||

- [x] [Python SDK](https://github.com/mendableai/firecrawl/tree/main/apps/python-sdk)

|

- [x] [API](https://docs.firecrawl.dev/api-reference/introduction)

|

||||||

- [x] [Node SDK](https://github.com/mendableai/firecrawl/tree/main/apps/js-sdk)

|

- [x] [Python SDK](https://docs.firecrawl.dev/sdks/python)

|

||||||

|

- [x] [Node SDK](https://docs.firecrawl.dev/sdks/node)

|

||||||

|

- [x] [Go SDK](https://docs.firecrawl.dev/sdks/go)

|

||||||

|

- [x] [Rust SDK](https://docs.firecrawl.dev/sdks/rust)

|

||||||

- [x] [Langchain Integration 🦜🔗](https://python.langchain.com/docs/integrations/document_loaders/firecrawl/)

|

- [x] [Langchain Integration 🦜🔗](https://python.langchain.com/docs/integrations/document_loaders/firecrawl/)

|

||||||

- [x] [Langchain JS Integration 🦜🔗](https://js.langchain.com/docs/integrations/document_loaders/web_loaders/firecrawl)

|

- [x] [Langchain JS Integration 🦜🔗](https://js.langchain.com/docs/integrations/document_loaders/web_loaders/firecrawl)

|

||||||

- [x] [Llama Index Integration 🦙](https://docs.llamaindex.ai/en/latest/examples/data_connectors/WebPageDemo/#using-firecrawl-reader)

|

- [x] [Llama Index Integration 🦙](https://docs.llamaindex.ai/en/latest/examples/data_connectors/WebPageDemo/#using-firecrawl-reader)

|

||||||

|

|

@ -62,8 +65,12 @@ We provide an easy to use API with our hosted version. You can find the playgrou

|

||||||

- [x] [Langflow Integration](https://docs.langflow.org/)

|

- [x] [Langflow Integration](https://docs.langflow.org/)

|

||||||

- [x] [Crew.ai Integration](https://docs.crewai.com/)

|

- [x] [Crew.ai Integration](https://docs.crewai.com/)

|

||||||

- [x] [Flowise AI Integration](https://docs.flowiseai.com/integrations/langchain/document-loaders/firecrawl)

|

- [x] [Flowise AI Integration](https://docs.flowiseai.com/integrations/langchain/document-loaders/firecrawl)

|

||||||

|

- [x] [Composio Integration](https://composio.dev/tools/firecrawl/all)

|

||||||

- [x] [PraisonAI Integration](https://docs.praison.ai/firecrawl/)

|

- [x] [PraisonAI Integration](https://docs.praison.ai/firecrawl/)

|

||||||

- [x] [Zapier Integration](https://zapier.com/apps/firecrawl/integrations)

|

- [x] [Zapier Integration](https://zapier.com/apps/firecrawl/integrations)

|

||||||

|

- [x] [Cargo Integration](https://docs.getcargo.io/integration/firecrawl)

|

||||||

|

- [x] [Pipedream Integration](https://pipedream.com/apps/firecrawl/)

|

||||||

|

- [x] [Pabbly Integration](https://www.pabbly.com/connect/integrations/firecrawl/)

|

||||||

- [ ] Want an SDK or Integration? Let us know by opening an issue.

|

- [ ] Want an SDK or Integration? Let us know by opening an issue.

|

||||||

|

|

||||||

To run locally, refer to guide [here](https://github.com/mendableai/firecrawl/blob/main/SELF_HOST.md).

|

To run locally, refer to guide [here](https://github.com/mendableai/firecrawl/blob/main/SELF_HOST.md).

|

||||||

|

|

@ -402,15 +409,12 @@ class TopArticlesSchema(BaseModel):

|

||||||

top: List[ArticleSchema] = Field(..., max_items=5, description="Top 5 stories")

|

top: List[ArticleSchema] = Field(..., max_items=5, description="Top 5 stories")

|

||||||

|

|

||||||

data = app.scrape_url('https://news.ycombinator.com', {

|

data = app.scrape_url('https://news.ycombinator.com', {

|

||||||

'extractorOptions': {

|

'formats': ['extract'],

|

||||||

'extractionSchema': TopArticlesSchema.model_json_schema(),

|

'extract': {

|

||||||

'mode': 'llm-extraction'

|

'schema': TopArticlesSchema.model_json_schema()

|

||||||

},

|

|

||||||

'pageOptions':{

|

|

||||||

'onlyMainContent': True

|

|

||||||

}

|

}

|

||||||

})

|

})

|

||||||

print(data["llm_extraction"])

|

print(data["extract"])

|

||||||

```

|

```

|

||||||

|

|

||||||

## Using the Node SDK

|

## Using the Node SDK

|

||||||

|

|

@ -490,6 +494,17 @@ const scrapeResult = await app.scrapeUrl("https://news.ycombinator.com", {

|

||||||

console.log(scrapeResult.data["llm_extraction"]);

|

console.log(scrapeResult.data["llm_extraction"]);

|

||||||

```

|

```

|

||||||

|

|

||||||

|

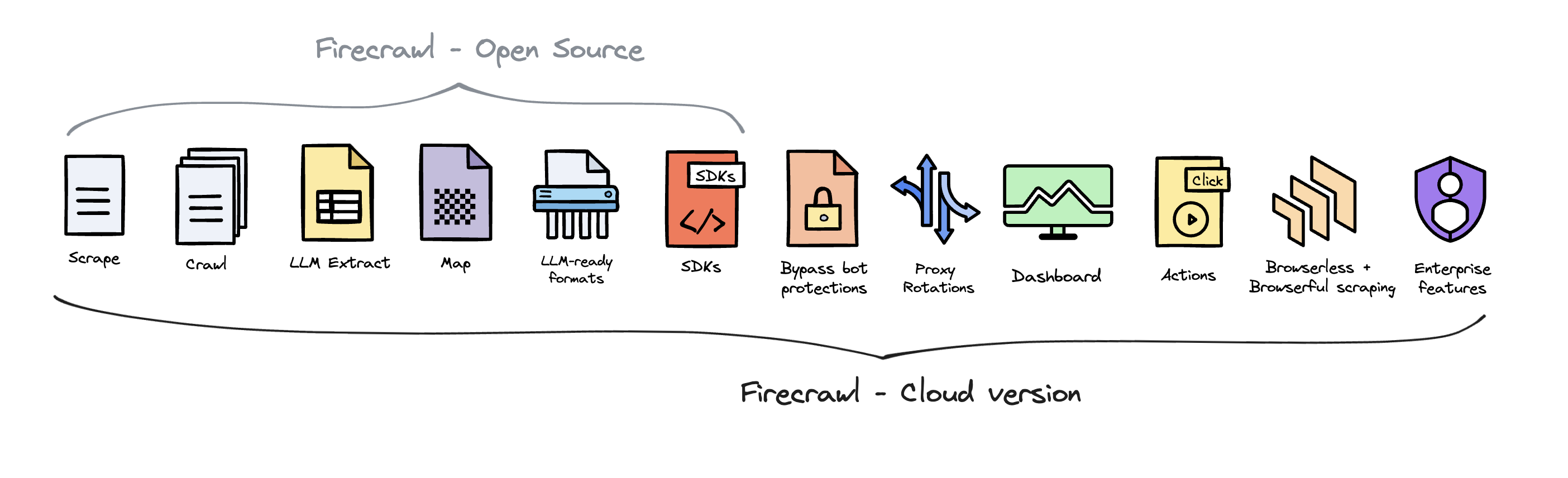

## Open Source vs Cloud Offering

|

||||||

|

|

||||||

|

Firecrawl is open source available under the AGPL-3.0 license.

|

||||||

|

|

||||||

|

To deliver the best possible product, we offer a hosted version of Firecrawl alongside our open-source offering. The cloud solution allows us to continuously innovate and maintain a high-quality, sustainable service for all users.

|

||||||

|

|

||||||

|

Firecrawl Cloud is available at [firecrawl.dev](https://firecrawl.dev) and offers a range of features that are not available in the open source version:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Contributing

|

## Contributing

|

||||||

|

|

||||||

We love contributions! Please read our [contributing guide](CONTRIBUTING.md) before submitting a pull request.

|

We love contributions! Please read our [contributing guide](CONTRIBUTING.md) before submitting a pull request.

|

||||||

|

|

|

||||||

|

|

@ -106,7 +106,7 @@ You should be able to see the Bull Queue Manager UI on `http://localhost:3002/ad

|

||||||

If you’d like to test the crawl endpoint, you can run this:

|

If you’d like to test the crawl endpoint, you can run this:

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

curl -X POST http://localhost:3002/v0/crawl \

|

curl -X POST http://localhost:3002/v1/crawl \

|

||||||

-H 'Content-Type: application/json' \

|

-H 'Content-Type: application/json' \

|

||||||

-d '{

|

-d '{

|

||||||

"url": "https://mendable.ai"

|

"url": "https://mendable.ai"

|

||||||

|

|

|

||||||

|

|

@ -17,8 +17,15 @@ RUN pnpm install

|

||||||

RUN --mount=type=secret,id=SENTRY_AUTH_TOKEN \

|

RUN --mount=type=secret,id=SENTRY_AUTH_TOKEN \

|

||||||

bash -c 'export SENTRY_AUTH_TOKEN="$(cat /run/secrets/SENTRY_AUTH_TOKEN)"; if [ -z $SENTRY_AUTH_TOKEN ]; then pnpm run build:nosentry; else pnpm run build; fi'

|

bash -c 'export SENTRY_AUTH_TOKEN="$(cat /run/secrets/SENTRY_AUTH_TOKEN)"; if [ -z $SENTRY_AUTH_TOKEN ]; then pnpm run build:nosentry; else pnpm run build; fi'

|

||||||

|

|

||||||

# Install packages needed for deployment

|

# Install Go

|

||||||

|

FROM golang:1.19 AS go-base

|

||||||

|

COPY src/lib/go-html-to-md /app/src/lib/go-html-to-md

|

||||||

|

|

||||||

|

# Install Go dependencies and build parser lib

|

||||||

|

RUN cd /app/src/lib/go-html-to-md && \

|

||||||

|

go mod tidy && \

|

||||||

|

go build -o html-to-markdown.so -buildmode=c-shared html-to-markdown.go && \

|

||||||

|

chmod +x html-to-markdown.so

|

||||||

|

|

||||||

FROM base

|

FROM base

|

||||||

RUN apt-get update -qq && \

|

RUN apt-get update -qq && \

|

||||||

|

|

@ -26,10 +33,8 @@ RUN apt-get update -qq && \

|

||||||

rm -rf /var/lib/apt/lists /var/cache/apt/archives

|

rm -rf /var/lib/apt/lists /var/cache/apt/archives

|

||||||

COPY --from=prod-deps /app/node_modules /app/node_modules

|

COPY --from=prod-deps /app/node_modules /app/node_modules

|

||||||

COPY --from=build /app /app

|

COPY --from=build /app /app

|

||||||

|

COPY --from=go-base /app/src/lib/go-html-to-md/html-to-markdown.so /app/dist/src/lib/go-html-to-md/html-to-markdown.so

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

# Start the server by default, this can be overwritten at runtime

|

# Start the server by default, this can be overwritten at runtime

|

||||||

EXPOSE 8080

|

EXPOSE 8080

|

||||||

ENV PUPPETEER_EXECUTABLE_PATH="/usr/bin/chromium"

|

ENV PUPPETEER_EXECUTABLE_PATH="/usr/bin/chromium"

|

||||||

|

|

@ -86,6 +86,7 @@

|

||||||

"joplin-turndown-plugin-gfm": "^1.0.12",

|

"joplin-turndown-plugin-gfm": "^1.0.12",

|

||||||

"json-schema-to-zod": "^2.3.0",

|

"json-schema-to-zod": "^2.3.0",

|

||||||

"keyword-extractor": "^0.0.28",

|

"keyword-extractor": "^0.0.28",

|

||||||

|

"koffi": "^2.9.0",

|

||||||

"langchain": "^0.2.8",

|

"langchain": "^0.2.8",

|

||||||

"languagedetect": "^2.0.0",

|

"languagedetect": "^2.0.0",

|

||||||

"logsnag": "^1.0.0",

|

"logsnag": "^1.0.0",

|

||||||

|

|

|

||||||

|

|

@ -122,6 +122,9 @@ importers:

|

||||||

keyword-extractor:

|

keyword-extractor:

|

||||||

specifier: ^0.0.28

|

specifier: ^0.0.28

|

||||||

version: 0.0.28

|

version: 0.0.28

|

||||||

|

koffi:

|

||||||

|

specifier: ^2.9.0

|

||||||

|

version: 2.9.0

|

||||||

langchain:

|

langchain:

|

||||||

specifier: ^0.2.8

|

specifier: ^0.2.8

|

||||||

version: 0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0)

|

version: 0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0)

|

||||||

|

|

@ -3170,6 +3173,9 @@ packages:

|

||||||

resolution: {integrity: sha512-eTIzlVOSUR+JxdDFepEYcBMtZ9Qqdef+rnzWdRZuMbOywu5tO2w2N7rqjoANZ5k9vywhL6Br1VRjUIgTQx4E8w==}

|

resolution: {integrity: sha512-eTIzlVOSUR+JxdDFepEYcBMtZ9Qqdef+rnzWdRZuMbOywu5tO2w2N7rqjoANZ5k9vywhL6Br1VRjUIgTQx4E8w==}

|

||||||

engines: {node: '>=6'}

|

engines: {node: '>=6'}

|

||||||

|

|

||||||

|

koffi@2.9.0:

|

||||||

|

resolution: {integrity: sha512-KCsuJ2gM58n6bNdR2Z7gqsh/3TchxxQFbVgax2/UvAjRTgwNSYAJDx9E3jrkBP4jEDHWRCfE47Y2OG+/fiSvEw==}

|

||||||

|

|

||||||

langchain@0.2.8:

|

langchain@0.2.8:

|

||||||

resolution: {integrity: sha512-kb2IOMA71xH8e6EXFg0l4S+QSMC/c796pj1+7mPBkR91HHwoyHZhFRrBaZv4tV+Td+Ba91J2uEDBmySklZLpNQ==}

|

resolution: {integrity: sha512-kb2IOMA71xH8e6EXFg0l4S+QSMC/c796pj1+7mPBkR91HHwoyHZhFRrBaZv4tV+Td+Ba91J2uEDBmySklZLpNQ==}

|

||||||

engines: {node: '>=18'}

|

engines: {node: '>=18'}

|

||||||

|

|

@ -8492,6 +8498,8 @@ snapshots:

|

||||||

|

|

||||||

kleur@3.0.3: {}

|

kleur@3.0.3: {}

|

||||||

|

|

||||||

|

koffi@2.9.0: {}

|

||||||

|

|

||||||

langchain@0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0):

|

langchain@0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0):

|

||||||

dependencies:

|

dependencies:

|

||||||

'@langchain/core': 0.2.12(langchain@0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0))(openai@4.57.0(zod@3.23.8))

|

'@langchain/core': 0.2.12(langchain@0.2.8(@supabase/supabase-js@2.44.2)(axios@1.7.2)(cheerio@1.0.0-rc.12)(handlebars@4.7.8)(html-to-text@9.0.5)(ioredis@5.4.1)(mammoth@1.7.2)(mongodb@6.6.2(socks@2.8.3))(openai@4.57.0(zod@3.23.8))(pdf-parse@1.1.1)(puppeteer@22.12.1(typescript@5.4.5))(redis@4.6.14)(ws@8.18.0))(openai@4.57.0(zod@3.23.8))

|

||||||

|

|

|

||||||

|

|

@ -1,11 +1,11 @@

|

||||||

import request from "supertest";

|

import request from "supertest";

|

||||||

import dotenv from "dotenv";

|

import { configDotenv } from "dotenv";

|

||||||

import {

|

import {

|

||||||

ScrapeRequest,

|

ScrapeRequest,

|

||||||

ScrapeResponseRequestTest,

|

ScrapeResponseRequestTest,

|

||||||

} from "../../controllers/v1/types";

|

} from "../../controllers/v1/types";

|

||||||

|

|

||||||

dotenv.config();

|

configDotenv();

|

||||||

const TEST_URL = "http://127.0.0.1:3002";

|

const TEST_URL = "http://127.0.0.1:3002";

|

||||||

|

|

||||||

describe("E2E Tests for v1 API Routes", () => {

|

describe("E2E Tests for v1 API Routes", () => {

|

||||||

|

|

@ -22,6 +22,13 @@ describe("E2E Tests for v1 API Routes", () => {

|

||||||

const response: ScrapeResponseRequestTest = await request(TEST_URL).get(

|

const response: ScrapeResponseRequestTest = await request(TEST_URL).get(

|

||||||

"/is-production"

|

"/is-production"

|

||||||

);

|

);

|

||||||

|

|

||||||

|

console.log('process.env.USE_DB_AUTHENTICATION', process.env.USE_DB_AUTHENTICATION);

|

||||||

|

console.log('?', process.env.USE_DB_AUTHENTICATION === 'true');

|

||||||

|

const useDbAuthentication = process.env.USE_DB_AUTHENTICATION === 'true';

|

||||||

|

console.log('!!useDbAuthentication', !!useDbAuthentication);

|

||||||

|

console.log('!useDbAuthentication', !useDbAuthentication);

|

||||||

|

|

||||||

expect(response.statusCode).toBe(200);

|

expect(response.statusCode).toBe(200);

|

||||||

expect(response.body).toHaveProperty("isProduction");

|

expect(response.body).toHaveProperty("isProduction");

|

||||||

});

|

});

|

||||||

|

|

|

||||||

|

|

@ -5,6 +5,8 @@ import { supabase_service } from "../../../src/services/supabase";

|

||||||

import { Logger } from "../../../src/lib/logger";

|

import { Logger } from "../../../src/lib/logger";

|

||||||

import { getCrawl, saveCrawl } from "../../../src/lib/crawl-redis";

|

import { getCrawl, saveCrawl } from "../../../src/lib/crawl-redis";

|

||||||

import * as Sentry from "@sentry/node";

|

import * as Sentry from "@sentry/node";

|

||||||

|

import { configDotenv } from "dotenv";

|

||||||

|

configDotenv();

|

||||||

|

|

||||||

export async function crawlCancelController(req: Request, res: Response) {

|

export async function crawlCancelController(req: Request, res: Response) {

|

||||||

try {

|

try {

|

||||||

|

|

|

||||||

|

|

@ -4,14 +4,16 @@ import { RateLimiterMode } from "../../../src/types";

|

||||||

import { getScrapeQueue } from "../../../src/services/queue-service";

|

import { getScrapeQueue } from "../../../src/services/queue-service";

|

||||||

import { Logger } from "../../../src/lib/logger";

|

import { Logger } from "../../../src/lib/logger";

|

||||||

import { getCrawl, getCrawlJobs } from "../../../src/lib/crawl-redis";

|

import { getCrawl, getCrawlJobs } from "../../../src/lib/crawl-redis";

|

||||||

import { supabaseGetJobsById } from "../../../src/lib/supabase-jobs";

|

import { supabaseGetJobsByCrawlId } from "../../../src/lib/supabase-jobs";

|

||||||

import * as Sentry from "@sentry/node";

|

import * as Sentry from "@sentry/node";

|

||||||

|

import { configDotenv } from "dotenv";

|

||||||

|

configDotenv();

|

||||||

|

|

||||||

export async function getJobs(ids: string[]) {

|

export async function getJobs(crawlId: string, ids: string[]) {

|

||||||

const jobs = (await Promise.all(ids.map(x => getScrapeQueue().getJob(x)))).filter(x => x);

|

const jobs = (await Promise.all(ids.map(x => getScrapeQueue().getJob(x)))).filter(x => x);

|

||||||

|

|

||||||

if (process.env.USE_DB_AUTHENTICATION === "true") {

|

if (process.env.USE_DB_AUTHENTICATION === "true") {

|

||||||

const supabaseData = await supabaseGetJobsById(ids);

|

const supabaseData = await supabaseGetJobsByCrawlId(crawlId);

|

||||||

|

|

||||||

supabaseData.forEach(x => {

|

supabaseData.forEach(x => {

|

||||||

const job = jobs.find(y => y.id === x.job_id);

|

const job = jobs.find(y => y.id === x.job_id);

|

||||||

|

|

@ -50,7 +52,7 @@ export async function crawlStatusController(req: Request, res: Response) {

|

||||||

|

|

||||||

const jobIDs = await getCrawlJobs(req.params.jobId);

|

const jobIDs = await getCrawlJobs(req.params.jobId);

|

||||||

|

|

||||||

const jobs = (await getJobs(jobIDs)).sort((a, b) => a.timestamp - b.timestamp);

|

const jobs = (await getJobs(req.params.jobId, jobIDs)).sort((a, b) => a.timestamp - b.timestamp);

|

||||||

const jobStatuses = await Promise.all(jobs.map(x => x.getState()));

|

const jobStatuses = await Promise.all(jobs.map(x => x.getState()));

|

||||||

const jobStatus = sc.cancelled ? "failed" : jobStatuses.every(x => x === "completed") ? "completed" : jobStatuses.some(x => x === "failed") ? "failed" : "active";

|

const jobStatus = sc.cancelled ? "failed" : jobStatuses.every(x => x === "completed") ? "completed" : jobStatuses.some(x => x === "failed") ? "failed" : "active";

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -39,7 +39,7 @@ export async function scrapeHelper(

|

||||||

returnCode: number;

|

returnCode: number;

|

||||||

}> {

|

}> {

|

||||||

const url = req.body.url;

|

const url = req.body.url;

|

||||||

if (!url) {

|

if (typeof url !== "string") {

|

||||||

return { success: false, error: "Url is required", returnCode: 400 };

|

return { success: false, error: "Url is required", returnCode: 400 };

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

@ -229,7 +229,7 @@ export async function scrapeController(req: Request, res: Response) {

|

||||||

|

|

||||||

if (result.success) {

|

if (result.success) {

|

||||||

let creditsToBeBilled = 1;

|

let creditsToBeBilled = 1;

|

||||||

const creditsPerLLMExtract = 49;

|

const creditsPerLLMExtract = 4;

|

||||||

|

|

||||||

if (extractorOptions.mode.includes("llm-extraction")) {

|

if (extractorOptions.mode.includes("llm-extraction")) {

|

||||||

// creditsToBeBilled = creditsToBeBilled + (creditsPerLLMExtract * filteredDocs.length);

|

// creditsToBeBilled = creditsToBeBilled + (creditsPerLLMExtract * filteredDocs.length);

|

||||||

|

|

|

||||||

|

|

@ -22,7 +22,7 @@ export async function crawlJobStatusPreviewController(req: Request, res: Respons

|

||||||

// }

|

// }

|

||||||

// }

|

// }

|

||||||

|

|

||||||

const jobs = (await getJobs(jobIDs)).sort((a, b) => a.timestamp - b.timestamp);

|

const jobs = (await getJobs(req.params.jobId, jobIDs)).sort((a, b) => a.timestamp - b.timestamp);

|

||||||

const jobStatuses = await Promise.all(jobs.map(x => x.getState()));

|

const jobStatuses = await Promise.all(jobs.map(x => x.getState()));

|

||||||

const jobStatus = sc.cancelled ? "failed" : jobStatuses.every(x => x === "completed") ? "completed" : jobStatuses.some(x => x === "failed") ? "failed" : "active";

|

const jobStatus = sc.cancelled ? "failed" : jobStatuses.every(x => x === "completed") ? "completed" : jobStatuses.some(x => x === "failed") ? "failed" : "active";

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -5,6 +5,8 @@ import { supabase_service } from "../../services/supabase";

|

||||||

import { Logger } from "../../lib/logger";

|

import { Logger } from "../../lib/logger";

|

||||||

import { getCrawl, saveCrawl } from "../../lib/crawl-redis";

|

import { getCrawl, saveCrawl } from "../../lib/crawl-redis";

|

||||||

import * as Sentry from "@sentry/node";

|

import * as Sentry from "@sentry/node";

|

||||||

|

import { configDotenv } from "dotenv";

|

||||||

|

configDotenv();

|

||||||

|

|

||||||

export async function crawlCancelController(req: Request, res: Response) {

|

export async function crawlCancelController(req: Request, res: Response) {

|

||||||

try {

|

try {

|

||||||

|

|

|

||||||

|

|

@ -103,6 +103,7 @@ async function crawlStatusWS(ws: WebSocket, req: RequestWithAuth<CrawlStatusPara

|

||||||

send(ws, {

|

send(ws, {

|

||||||

type: "catchup",

|

type: "catchup",

|

||||||

data: {

|

data: {

|

||||||

|

success: true,

|

||||||

status,

|

status,

|

||||||

total: jobIDs.length,

|

total: jobIDs.length,

|

||||||

completed: doneJobIDs.length,

|

completed: doneJobIDs.length,

|

||||||

|

|

|

||||||

|

|

@ -3,6 +3,8 @@ import { CrawlStatusParams, CrawlStatusResponse, ErrorResponse, legacyDocumentCo

|

||||||

import { getCrawl, getCrawlExpiry, getCrawlJobs, getDoneJobsOrdered, getDoneJobsOrderedLength } from "../../lib/crawl-redis";

|

import { getCrawl, getCrawlExpiry, getCrawlJobs, getDoneJobsOrdered, getDoneJobsOrderedLength } from "../../lib/crawl-redis";

|

||||||

import { getScrapeQueue } from "../../services/queue-service";

|

import { getScrapeQueue } from "../../services/queue-service";

|

||||||

import { supabaseGetJobById, supabaseGetJobsById } from "../../lib/supabase-jobs";

|

import { supabaseGetJobById, supabaseGetJobsById } from "../../lib/supabase-jobs";

|

||||||

|

import { configDotenv } from "dotenv";

|

||||||

|

configDotenv();

|

||||||

|

|

||||||

export async function getJob(id: string) {

|

export async function getJob(id: string) {

|

||||||

const job = await getScrapeQueue().getJob(id);

|

const job = await getScrapeQueue().getJob(id);

|

||||||

|

|

@ -92,7 +94,8 @@ export async function crawlStatusController(req: RequestWithAuth<CrawlStatusPara

|

||||||

|

|

||||||

const data = doneJobs.map(x => x.returnvalue);

|

const data = doneJobs.map(x => x.returnvalue);

|

||||||

|

|

||||||

const nextURL = new URL(`${req.protocol}://${req.get("host")}/v1/crawl/${req.params.jobId}`);

|

const protocol = process.env.ENV === "local" ? req.protocol : "https";

|

||||||

|

const nextURL = new URL(`${protocol}://${req.get("host")}/v1/crawl/${req.params.jobId}`);

|

||||||

|

|

||||||

nextURL.searchParams.set("skip", (start + data.length).toString());

|

nextURL.searchParams.set("skip", (start + data.length).toString());

|

||||||

|

|

||||||

|

|

@ -111,6 +114,7 @@ export async function crawlStatusController(req: RequestWithAuth<CrawlStatusPara

|

||||||

}

|

}

|

||||||

|

|

||||||

res.status(200).json({

|

res.status(200).json({

|

||||||

|

success: true,

|

||||||

status,

|

status,

|

||||||

completed: doneJobsLength,

|

completed: doneJobsLength,

|

||||||

total: jobIDs.length,

|

total: jobIDs.length,

|

||||||

|

|

|

||||||

|

|

@ -155,10 +155,12 @@ export async function crawlController(

|

||||||

await callWebhook(req.auth.team_id, id, null, req.body.webhook, true, "crawl.started");

|

await callWebhook(req.auth.team_id, id, null, req.body.webhook, true, "crawl.started");

|

||||||

}

|

}

|

||||||

|

|

||||||

|

const protocol = process.env.ENV === "local" ? req.protocol : "https";

|

||||||

|

|

||||||

return res.status(200).json({

|

return res.status(200).json({

|

||||||

success: true,

|

success: true,

|

||||||

id,

|

id,

|

||||||

url: `${req.protocol}://${req.get("host")}/v1/crawl/${id}`,

|

url: `${protocol}://${req.get("host")}/v1/crawl/${id}`,

|

||||||

});

|

});

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -19,8 +19,15 @@ import { billTeam } from "../../services/billing/credit_billing";

|

||||||

import { logJob } from "../../services/logging/log_job";

|

import { logJob } from "../../services/logging/log_job";

|

||||||

import { performCosineSimilarity } from "../../lib/map-cosine";

|

import { performCosineSimilarity } from "../../lib/map-cosine";

|

||||||

import { Logger } from "../../lib/logger";

|

import { Logger } from "../../lib/logger";

|

||||||

|

import Redis from "ioredis";

|

||||||

|

|

||||||

configDotenv();

|

configDotenv();

|

||||||

|

const redis = new Redis(process.env.REDIS_URL);

|

||||||

|

|

||||||

|

// Max Links that /map can return

|

||||||

|

const MAX_MAP_LIMIT = 5000;

|

||||||

|

// Max Links that "Smart /map" can return

|

||||||

|

const MAX_FIRE_ENGINE_RESULTS = 1000;

|

||||||

|

|

||||||

export async function mapController(

|

export async function mapController(

|

||||||

req: RequestWithAuth<{}, MapResponse, MapRequest>,

|

req: RequestWithAuth<{}, MapResponse, MapRequest>,

|

||||||

|

|

@ -30,8 +37,7 @@ export async function mapController(

|

||||||

|

|

||||||

req.body = mapRequestSchema.parse(req.body);

|

req.body = mapRequestSchema.parse(req.body);

|

||||||

|

|

||||||

|

const limit: number = req.body.limit ?? MAX_MAP_LIMIT;

|

||||||

const limit : number = req.body.limit ?? 5000;

|

|

||||||

|

|

||||||

const id = uuidv4();

|

const id = uuidv4();

|

||||||

let links: string[] = [req.body.url];

|

let links: string[] = [req.body.url];

|

||||||

|

|

@ -47,24 +53,61 @@ export async function mapController(

|

||||||

|

|

||||||

const crawler = crawlToCrawler(id, sc);

|

const crawler = crawlToCrawler(id, sc);

|

||||||

|

|

||||||

const sitemap = req.body.ignoreSitemap ? null : await crawler.tryGetSitemap();

|

|

||||||

|

|

||||||

if (sitemap !== null) {

|

|

||||||

sitemap.map((x) => {

|

|

||||||

links.push(x.url);

|

|

||||||

});

|

|

||||||

}

|

|

||||||

|

|

||||||

let urlWithoutWww = req.body.url.replace("www.", "");

|

let urlWithoutWww = req.body.url.replace("www.", "");

|

||||||

|

|

||||||

let mapUrl = req.body.search

|

let mapUrl = req.body.search

|

||||||

? `"${req.body.search}" site:${urlWithoutWww}`

|

? `"${req.body.search}" site:${urlWithoutWww}`

|

||||||

: `site:${req.body.url}`;

|

: `site:${req.body.url}`;

|

||||||

// www. seems to exclude subdomains in some cases

|

|

||||||

const mapResults = await fireEngineMap(mapUrl, {

|

const resultsPerPage = 100;

|

||||||

// limit to 50 results (beta)

|

const maxPages = Math.ceil(Math.min(MAX_FIRE_ENGINE_RESULTS, limit) / resultsPerPage);

|

||||||

numResults: Math.min(limit, 50),

|

|

||||||

});

|

const cacheKey = `fireEngineMap:${mapUrl}`;

|

||||||

|

const cachedResult = await redis.get(cacheKey);

|

||||||

|

|

||||||

|

let allResults: any[];

|

||||||

|

let pagePromises: Promise<any>[];

|

||||||

|

|

||||||

|

if (cachedResult) {

|

||||||

|

allResults = JSON.parse(cachedResult);

|

||||||

|

} else {

|

||||||

|

const fetchPage = async (page: number) => {

|

||||||

|

return fireEngineMap(mapUrl, {

|

||||||

|

numResults: resultsPerPage,

|

||||||

|

page: page,

|

||||||

|

});

|

||||||

|

};

|

||||||

|

|

||||||

|

pagePromises = Array.from({ length: maxPages }, (_, i) => fetchPage(i + 1));

|

||||||

|

allResults = await Promise.all(pagePromises);

|

||||||

|

|

||||||

|

await redis.set(cacheKey, JSON.stringify(allResults), "EX", 24 * 60 * 60); // Cache for 24 hours

|

||||||

|

}

|

||||||

|

|

||||||

|

// Parallelize sitemap fetch with serper search

|

||||||

|

const [sitemap, ...searchResults] = await Promise.all([

|

||||||

|

req.body.ignoreSitemap ? null : crawler.tryGetSitemap(),

|

||||||

|

...(cachedResult ? [] : pagePromises),

|

||||||

|

]);

|

||||||

|

|

||||||

|

if (!cachedResult) {

|

||||||

|

allResults = searchResults;

|

||||||

|

}

|

||||||

|

|

||||||

|

if (sitemap !== null) {

|

||||||

|

sitemap.forEach((x) => {

|

||||||

|

links.push(x.url);

|

||||||

|

});

|

||||||

|

}

|

||||||

|

|

||||||

|

let mapResults = allResults

|

||||||

|

.flat()

|

||||||

|

.filter((result) => result !== null && result !== undefined);

|

||||||

|

|

||||||

|

const minumumCutoff = Math.min(MAX_MAP_LIMIT, limit);

|

||||||

|

if (mapResults.length > minumumCutoff) {

|

||||||

|

mapResults = mapResults.slice(0, minumumCutoff);

|

||||||

|

}

|

||||||

|

|

||||||

if (mapResults.length > 0) {

|

if (mapResults.length > 0) {

|

||||||

if (req.body.search) {

|

if (req.body.search) {

|

||||||

|

|

@ -84,11 +127,19 @@ export async function mapController(

|

||||||

// Perform cosine similarity between the search query and the list of links

|

// Perform cosine similarity between the search query and the list of links

|

||||||

if (req.body.search) {

|

if (req.body.search) {

|

||||||

const searchQuery = req.body.search.toLowerCase();

|

const searchQuery = req.body.search.toLowerCase();

|

||||||

|

|

||||||

links = performCosineSimilarity(links, searchQuery);

|

links = performCosineSimilarity(links, searchQuery);

|

||||||

}

|

}

|

||||||

|

|

||||||

links = links.map((x) => checkAndUpdateURLForMap(x).url.trim());

|

links = links

|

||||||

|

.map((x) => {

|

||||||

|

try {

|

||||||

|

return checkAndUpdateURLForMap(x).url.trim();

|

||||||

|

} catch (_) {

|

||||||

|

return null;

|

||||||

|

}

|

||||||

|

})

|

||||||

|

.filter((x) => x !== null);

|

||||||

|

|

||||||

// allows for subdomains to be included

|

// allows for subdomains to be included

|

||||||

links = links.filter((x) => isSameDomain(x, req.body.url));

|

links = links.filter((x) => isSameDomain(x, req.body.url));

|

||||||

|

|

@ -101,8 +152,10 @@ export async function mapController(

|

||||||

// remove duplicates that could be due to http/https or www

|

// remove duplicates that could be due to http/https or www

|

||||||

links = removeDuplicateUrls(links);

|

links = removeDuplicateUrls(links);

|

||||||

|

|

||||||

billTeam(req.auth.team_id, 1).catch(error => {

|

billTeam(req.auth.team_id, 1).catch((error) => {

|

||||||

Logger.error(`Failed to bill team ${req.auth.team_id} for 1 credit: ${error}`);

|

Logger.error(

|

||||||

|

`Failed to bill team ${req.auth.team_id} for 1 credit: ${error}`

|

||||||

|

);

|

||||||

// Optionally, you could notify an admin or add to a retry queue here

|

// Optionally, you could notify an admin or add to a retry queue here

|

||||||

});

|

});

|

||||||

|

|

||||||

|

|

@ -110,7 +163,7 @@ export async function mapController(

|

||||||

const timeTakenInSeconds = (endTime - startTime) / 1000;

|

const timeTakenInSeconds = (endTime - startTime) / 1000;

|

||||||

|

|

||||||

const linksToReturn = links.slice(0, limit);

|

const linksToReturn = links.slice(0, limit);

|

||||||

|

|

||||||

logJob({

|

logJob({

|

||||||

job_id: id,

|

job_id: id,

|

||||||

success: links.length > 0,

|

success: links.length > 0,

|

||||||

|

|

@ -134,3 +187,51 @@ export async function mapController(

|

||||||

scrape_id: req.body.origin?.includes("website") ? id : undefined,

|

scrape_id: req.body.origin?.includes("website") ? id : undefined,

|

||||||

});

|

});

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// Subdomain sitemap url checking

|

||||||

|

|

||||||

|

// // For each result, check for subdomains, get their sitemaps and add them to the links

|

||||||

|

// const processedUrls = new Set();

|

||||||

|

// const processedSubdomains = new Set();

|

||||||

|

|

||||||

|

// for (const result of links) {

|

||||||

|

// let url;

|

||||||

|

// let hostParts;

|

||||||

|

// try {

|

||||||

|

// url = new URL(result);

|

||||||

|

// hostParts = url.hostname.split('.');

|

||||||

|

// } catch (e) {

|

||||||

|

// continue;

|

||||||

|

// }

|

||||||

|

|

||||||

|

// console.log("hostParts", hostParts);

|

||||||

|

// // Check if it's a subdomain (more than 2 parts, and not 'www')

|

||||||

|

// if (hostParts.length > 2 && hostParts[0] !== 'www') {

|

||||||

|

// const subdomain = hostParts[0];

|

||||||

|

// console.log("subdomain", subdomain);

|

||||||

|

// const subdomainUrl = `${url.protocol}//${subdomain}.${hostParts.slice(-2).join('.')}`;

|

||||||

|

// console.log("subdomainUrl", subdomainUrl);

|

||||||

|

|

||||||

|

// if (!processedSubdomains.has(subdomainUrl)) {

|

||||||

|

// processedSubdomains.add(subdomainUrl);

|

||||||

|

|

||||||

|

// const subdomainCrawl = crawlToCrawler(id, {

|

||||||

|

// originUrl: subdomainUrl,

|

||||||

|

// crawlerOptions: legacyCrawlerOptions(req.body),

|

||||||

|

// pageOptions: {},

|

||||||

|

// team_id: req.auth.team_id,

|

||||||

|

// createdAt: Date.now(),

|

||||||

|

// plan: req.auth.plan,

|

||||||

|

// });

|

||||||

|

// const subdomainSitemap = await subdomainCrawl.tryGetSitemap();

|

||||||

|

// if (subdomainSitemap) {

|

||||||

|

// subdomainSitemap.forEach((x) => {

|

||||||

|

// if (!processedUrls.has(x.url)) {

|

||||||

|

// processedUrls.add(x.url);

|

||||||

|

// links.push(x.url);

|

||||||

|

// }

|

||||||

|

// });

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

|

|

||||||

|

|

@ -103,7 +103,7 @@ export async function scrapeController(

|

||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

if(req.body.extract && req.body.formats.includes("extract")) {

|

if(req.body.extract && req.body.formats.includes("extract")) {

|

||||||

creditsToBeBilled = 50;

|

creditsToBeBilled = 5;

|

||||||

}

|

}

|

||||||

|

|

||||||

billTeam(req.auth.team_id, creditsToBeBilled).catch(error => {

|

billTeam(req.auth.team_id, creditsToBeBilled).catch(error => {

|

||||||

|

|

|

||||||

|

|

@ -30,7 +30,14 @@ export const url = z.preprocess(

|

||||||

"URL must have a valid top-level domain or be a valid path"

|

"URL must have a valid top-level domain or be a valid path"

|

||||||

)

|

)

|

||||||

.refine(

|

.refine(

|

||||||

(x) => checkUrl(x as string),

|

(x) => {

|

||||||

|

try {

|

||||||

|

checkUrl(x as string)

|

||||||

|

return true;

|

||||||

|

} catch (_) {

|

||||||

|

return false;

|

||||||

|

}

|

||||||

|

},

|

||||||

"Invalid URL"

|

"Invalid URL"

|

||||||

)

|

)

|

||||||

.refine(

|

.refine(

|

||||||

|

|

@ -63,7 +70,8 @@ export const scrapeOptions = z.object({

|

||||||

])

|

])

|

||||||

.array()

|

.array()

|

||||||

.optional()

|

.optional()

|

||||||

.default(["markdown"]),

|

.default(["markdown"])

|

||||||

|

.refine(x => !(x.includes("screenshot") && x.includes("screenshot@fullPage")), "You may only specify either screenshot or screenshot@fullPage"),

|

||||||

headers: z.record(z.string(), z.string()).optional(),

|

headers: z.record(z.string(), z.string()).optional(),

|

||||||

includeTags: z.string().array().optional(),

|

includeTags: z.string().array().optional(),

|

||||||

excludeTags: z.string().array().optional(),

|

excludeTags: z.string().array().optional(),

|

||||||

|

|

@ -257,6 +265,7 @@ export type CrawlStatusParams = {

|

||||||

export type CrawlStatusResponse =

|

export type CrawlStatusResponse =

|

||||||

| ErrorResponse

|

| ErrorResponse

|

||||||

| {

|

| {

|

||||||

|

success: true;

|

||||||

status: "scraping" | "completed" | "failed" | "cancelled";

|

status: "scraping" | "completed" | "failed" | "cancelled";

|

||||||

completed: number;

|

completed: number;

|

||||||

total: number;

|

total: number;

|

||||||

|

|

@ -322,6 +331,7 @@ export function legacyScrapeOptions(x: ScrapeOptions): PageOptions {

|

||||||

removeTags: x.excludeTags,

|

removeTags: x.excludeTags,

|